Abstract

Screen scraping is the act of programmatically extracting data displayed on the screen, evaluating it, and converting the required information into an understandable format. The technique is widely used by search engines to collect information from web sites, by integration tools to transfer data between systems, by business intelligence applications to support strategic decisions, and by meta-search services, among others. Its applications are countless, but careless use can lead to legal issues. This white paper is a case study of an Internet screen scraping solution developed to automate routine web tasks and to extract information from web-based systems.

Internet Screen Scraping

Screen scraping is the act of programmatically extracting data displayed on the screen, evaluating it, and converting the required information into an understandable format.

There is a plethora of applications for screen scraping: sometimes it is done simply to display information in a richer fashion, and sometimes to send information to another system. A common example is Web search engines, whose spiders use screen scraping to extract and record keywords from HTML pages.
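To make the search-engine example concrete, here is a minimal sketch of how a spider might pull keywords out of an HTML page using only Python's standard-library `html.parser`. The stop-word list, the `extract_keywords` helper and the sample page are illustrative assumptions; real search engines use far more elaborate text analysis.

```python
import re
from collections import Counter
from html.parser import HTMLParser

# Illustrative stop-word list (an assumption; real spiders use much larger lists).
STOP_WORDS = {"the", "a", "an", "and", "of", "to", "in", "for", "is", "on"}


class TextCollector(HTMLParser):
    """Collects visible text, skipping the contents of <script> and <style>."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0  # >0 while inside <script>/<style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def extract_keywords(html, top_n=5):
    """Return the top_n most frequent non-stop-words in the page text."""
    collector = TextCollector()
    collector.feed(html)
    collector.close()
    words = re.findall(r"[a-z]+", " ".join(collector.chunks).lower())
    counts = Counter(w for w in words if w not in STOP_WORDS)
    return [word for word, _ in counts.most_common(top_n)]
```

For a page titled "Scraping guide" whose body reads "Scraping the web: scraping tools and web data.", `extract_keywords(page, top_n=2)` returns `['scraping', 'web']`; the script contents never reach the keyword counter.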

In its traditional sense, screen scraping is programming that translates between legacy application programs (written to communicate with now generally obsolete input/output devices and user interfaces) and new user interfaces, so that the logic and data associated with the legacy programs can continue to be used.

In this white paper, we discuss only Internet screen scraping. Internet screen scraping, or Web scraping, is programming that translates data between a source web application and a destination application. The source application, that is, the application to be scraped, must be ‘scrapable’: a web-based application with an HTML front end that can be parsed.

For a scrapable application, most of the information of interest is textual and can be parsed. Web applications developed using Developer 2000, Java applets and similar technologies are out of scope, because their content cannot be parsed; these require conventional screen scraping techniques (terminal emulation, character reading, etc.). Scrapable web applications are typically developed using technologies such as Java, JSP, ASP, .NET and other web technologies. The only requirement is that the output pages generated by the web server contain textual content.
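The "textual content" requirement can be checked mechanically before a scraper is built. The sketch below is a rough heuristic of our own, not a standard test: it counts the visible text outside `<script>`, `<style>`, `<applet>` and `<object>` elements and calls the page scrapable when that count reaches a threshold. The 20-character default and the `is_scrapable` name are arbitrary assumptions.

```python
from html.parser import HTMLParser

# Containers whose payload an HTML parser cannot read (applets, plug-ins).
# <embed> is a void element, so it is only counted, never "entered".
OPAQUE_CONTAINERS = {"applet", "object"}
SKIPPED = {"script", "style"}


class ScrapabilityProbe(HTMLParser):
    """Counts visible text characters outside scripts, styles and applets."""

    def __init__(self):
        super().__init__()
        self.text_chars = 0
        self.opaque_elements = 0
        self._depth = 0  # >0 while inside a skipped or opaque container

    def handle_starttag(self, tag, attrs):
        if tag in OPAQUE_CONTAINERS or tag == "embed":
            self.opaque_elements += 1
        if tag in OPAQUE_CONTAINERS or tag in SKIPPED:
            self._depth += 1

    def handle_endtag(self, tag):
        if (tag in OPAQUE_CONTAINERS or tag in SKIPPED) and self._depth:
            self._depth -= 1

    def handle_data(self, data):
        if not self._depth:
            self.text_chars += len(data.strip())


def is_scrapable(html, min_text_chars=20):
    """Heuristic: the page exposes at least min_text_chars of parsable text."""
    probe = ScrapabilityProbe()
    probe.feed(html)
    probe.close()
    return probe.text_chars >= min_text_chars
```

A JSP page that renders an order table passes, while a page whose only content is an `<applet>` (including its "Java is required" fallback text) does not.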

Why Web Scraping?

Internet Screen Scraping can be used in the following areas:

- Application Integration

- Data Mining and Extraction

- Data Migration

- Business Intelligence

- Web Task Automation

- Portal Components

- Meta-Searching

- Archiving

Application Integration

Organizations often have multiple web-based applications supporting their business processes. Communication between these systems is frequently handled through manual processes, which are error-prone. Screen scraping can be used to build bridging software that integrates these applications into a single application and transfers data from one system to another in real time.

- Transferring data between different systems in real time

- Bridging external applications to internal systems

- Building a single interface from many independent systems
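A typical bridge reads a table from the source application's page and hands the rows to the destination system in a structured form. The sketch below assumes, for illustration, that the source page lays out records in an ordinary `<table>` whose first row holds the column headers and whose cells are explicitly closed; it converts that table into JSON that a destination system could consume. The `table_to_json` helper and the sample data are hypothetical.

```python
import json
from html.parser import HTMLParser


class TableExtractor(HTMLParser):
    """Collects the cell text of every <tr> in the page as a list of rows."""

    def __init__(self):
        super().__init__()
        self.rows = []
        self._row = None   # cells of the row currently being read
        self._cell = None  # text fragments of the cell currently being read

    def handle_starttag(self, tag, attrs):
        if tag == "tr":
            self._row = []
        elif tag in ("td", "th") and self._row is not None:
            self._cell = []

    def handle_endtag(self, tag):
        if tag in ("td", "th") and self._cell is not None:
            self._row.append("".join(self._cell).strip())
            self._cell = None
        elif tag == "tr" and self._row is not None:
            self.rows.append(self._row)
            self._row = None

    def handle_data(self, data):
        if self._cell is not None:
            self._cell.append(data)


def table_to_json(html):
    """Use the first row as keys and turn every later row into a JSON object."""
    parser = TableExtractor()
    parser.feed(html)
    parser.close()
    header, *body = parser.rows
    return json.dumps([dict(zip(header, row)) for row in body])
```

The resulting JSON could then be posted to the destination system's import interface; how that is done depends entirely on the destination and is not shown here.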

Data Mining and Extraction

Extracting data from secure web sites requires authenticating, browsing to a particular web page, entering certain parameters, and so on. The resulting web page containing the relevant information then needs to be validated, converted into a different format, inserted into a database, or passed to another application. A few example applications of data mining and extraction are given below:

- Pulling product data from multiple web sites to create meta-search sites

- Extracting news headlines to use in RSS feeds

- Archiving web pages or resources
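As a sketch of the "news headlines to RSS" case, the code below validates and extracts headline links from a page and converts them into a minimal RSS 2.0 channel with `xml.etree.ElementTree`. It assumes, hypothetically, that headlines are `<a>` elements carrying `class="headline"`; a real site needs its own extraction rule, and a secure site would additionally need a login step before the page is fetched.

```python
import xml.etree.ElementTree as ET
from html.parser import HTMLParser
from urllib.parse import urljoin


class HeadlineParser(HTMLParser):
    """Collects (title, absolute URL) pairs from <a class="headline"> links."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.headlines = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "a" and "headline" in (attrs.get("class") or "").split():
            self._href = attrs.get("href")
            self._text = []

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            title = "".join(self._text).strip()
            if title:  # validation step: skip empty headlines
                self.headlines.append((title, urljoin(self.base_url, self._href)))
            self._href = None

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)


def headlines_to_rss(html, base_url, channel_title):
    """Build an RSS 2.0 document string from the scraped headlines."""
    parser = HeadlineParser(base_url)
    parser.feed(html)
    parser.close()
    rss = ET.Element("rss", version="2.0")
    channel = ET.SubElement(rss, "channel")
    ET.SubElement(channel, "title").text = channel_title
    ET.SubElement(channel, "link").text = base_url
    ET.SubElement(channel, "description").text = "Scraped headlines"
    for title, link in parser.headlines:
        item = ET.SubElement(channel, "item")
        ET.SubElement(item, "title").text = title
        ET.SubElement(item, "link").text = link
    return ET.tostring(rss, encoding="unicode")
```

Relative links are resolved against the page URL with `urljoin`, so the feed items remain valid outside the original site.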

Data Migration

Many legacy systems provide only read-only access to the data behind them. Migrating this data to a more flexible system manually involves a large amount of costly effort and is error-prone. To minimize these risks, data should be migrated from legacy systems without manual intervention.

- Migrating data from legacy applications

- Migrating data from one application to another when upgrading
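When a legacy system exposes its data only through read-only screens, migration can be scripted as "scrape each record page, validate, insert". The sketch below assumes, for illustration, that each record page shows labelled fields as `<span class="field" data-name="...">value</span>`, and loads them into an SQLite table with the standard `sqlite3` module. The inserts run inside one transaction, so an invalid record rolls back the whole batch instead of leaving a half-migrated table. The markup, table and helper names are hypothetical.

```python
import sqlite3
from html.parser import HTMLParser


class FieldParser(HTMLParser):
    """Reads <span class="field" data-name="..."> values into a dict."""

    def __init__(self):
        super().__init__()
        self.fields = {}
        self._name = None

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "span" and attrs.get("class") == "field" and attrs.get("data-name"):
            self._name = attrs["data-name"]
            self.fields[self._name] = ""

    def handle_endtag(self, tag):
        if tag == "span":
            self._name = None

    def handle_data(self, data):
        if self._name is not None:
            self.fields[self._name] += data.strip()


def parse_record(html):
    parser = FieldParser()
    parser.feed(html)
    parser.close()
    return parser.fields


def migrate(pages, conn):
    """Validate and insert one customer row per legacy record page."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS customer (id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
    )
    with conn:  # commits on success, rolls back if any record fails
        for html in pages:
            record = parse_record(html)
            if not record.get("id", "").isdigit() or not record.get("name"):
                raise ValueError(f"invalid legacy record: {record!r}")
            conn.execute(
                "INSERT INTO customer (id, name) VALUES (?, ?)",
                (int(record["id"]), record["name"]),
            )
```

Failing loudly on a malformed record, rather than skipping it, keeps the migrated data complete; the offending page can be fixed and the batch rerun.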

Business Intelligence

To succeed, a company has to understand its market and competitors, but information about competitors' products and services is valuable data that they are unlikely to share except through a publicly accessible website.

- Comparing product listings and prices of a competitor

- Extracting news relevant to your marketplace on a routine basis

- Monitoring new product and service launches
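For the price-comparison case, the essential steps are extracting the competitor's listed prices and comparing them with your own. The sketch assumes, hypothetically, that the competitor's listing marks each product with `data-sku` and `data-price` attributes; real pages usually need a site-specific extraction rule. Prices are handled as `Decimal` to avoid floating-point rounding errors.

```python
from decimal import Decimal
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collects {sku: price} from any element carrying data-sku and data-price."""

    def __init__(self):
        super().__init__()
        self.prices = {}

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if attrs.get("data-sku") and attrs.get("data-price"):
            self.prices[attrs["data-sku"]] = Decimal(attrs["data-price"])


def compare_prices(competitor_html, our_prices):
    """Return {sku: our price minus competitor price} for SKUs both sides list."""
    parser = PriceParser()
    parser.feed(competitor_html)
    parser.close()
    return {
        sku: our_prices[sku] - price
        for sku, price in parser.prices.items()
        if sku in our_prices
    }
```

A positive difference means our price is higher than the competitor's; running the comparison on a schedule turns it into the routine monitoring described above.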

Web Task Automation

Web tasks such as entering data into forms, testing a web server's output and monitoring business transactions require manual effort and web navigation. The data to be entered may come from one or more databases, other applications or other web sites. Because these tasks are tedious and error-prone, they are good candidates for automation using screen scraping.

- Monitoring web portals and complex transactions, including transactions that require input from other databases or web applications

- Web site testing
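Automating a form-based task usually means reading the input from another system and replaying the HTTP request a browser would send. The sketch below builds, but does not send, an `application/x-www-form-urlencoded` POST from one database row using the standard `urllib` modules, and adds a simple content check of the kind used in web site testing. The endpoint URL and field names are hypothetical; a real form may also require a session cookie or hidden fields (such as anti-CSRF tokens) that must first be scraped from the form page.

```python
from urllib.parse import urlencode
from urllib.request import Request

# Hypothetical target form; replace with the real action URL and field names.
FORM_URL = "https://intranet.example.com/orders/new"


def build_form_request(row):
    """Encode one database row as the POST a browser would submit."""
    body = urlencode({
        "customer_id": row["customer_id"],
        "item": row["item"],
        "quantity": str(row["quantity"]),
    }).encode("ascii")
    return Request(
        FORM_URL,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def page_confirms(html, expected_text):
    """Basic web-site test: does the response contain the expected confirmation?"""
    return expected_text in html
```

In a live run, the request would be sent with `urllib.request.urlopen(request)`, and the decoded response body passed to `page_confirms` to verify that the server accepted the entry.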

Portal Components

Developing portal components can be a challenge when the only common interface between applications is the web interface. Interfacing applications directly requires knowledge of their APIs and, where these are known, the creation of complex middleware. Building portal components should also not affect the parent applications.

- Common look and feel across multiple applications

- Creating portlets (embedding services like weather forecast)

- Combining applications into a single interface
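A portlet built by scraping typically pulls one fragment out of a source page and re-renders it in the portal's own markup, leaving the parent application untouched. The sketch below assumes, hypothetically, that the source page exposes the fragment in an element with a known `id` (here `forecast`). It keeps only the fragment's text and escapes it before placing it in a shared template, so no markup or script from the source page leaks into the portal. The template and helper names are illustrative.

```python
from html import escape
from html.parser import HTMLParser

# Hypothetical portal template shared by all portlets (common look and feel).
PORTLET_TEMPLATE = '<div class="portlet"><h3>{title}</h3><p>{body}</p></div>'

# Void elements never get an end tag, so they must not change the depth count.
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class FragmentText(HTMLParser):
    """Collects the text inside the element whose id equals target_id."""

    def __init__(self, target_id):
        super().__init__()
        self.target_id = target_id
        self.parts = []
        self._depth = 0  # >0 while inside the target element

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        if self._depth:
            self._depth += 1
        elif dict(attrs).get("id") == self.target_id:
            self._depth = 1

    def handle_endtag(self, tag):
        if tag not in VOID_TAGS and self._depth:
            self._depth -= 1

    def handle_data(self, data):
        if self._depth:
            self.parts.append(data)


def render_portlet(source_html, target_id, title):
    """Re-render the scraped fragment as escaped text inside the portal template."""
    parser = FragmentText(target_id)
    parser.feed(source_html)
    parser.close()
    text = " ".join("".join(parser.parts).split())  # collapse whitespace
    return PORTLET_TEMPLATE.format(title=escape(title), body=escape(text))
```

Rendering every portlet through the same template is what gives scraped content from different applications a common look and feel.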

Meta-Searching

Meta-searching is the ability to search multiple web sites and compare the results. Because many sites do not provide a consistent interface, the data from each site must be extracted and stored in a uniform format so that the search results are consistent.

- Creating meta-search web portals for price matching.

- Conducting product research
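The core of meta-searching is one extractor per site that maps that site's layout onto a shared record type, after which results can be merged and ranked together. In the sketch below, the two source layouts, the regular expressions and the `Offer` record are hypothetical. Regular expressions are only adequate for very regular markup like this; an HTML parser is the safer choice in general.

```python
import re
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Offer:
    """Uniform record that every site-specific extractor must produce."""
    site: str
    title: str
    price: Decimal


def extract_site_a(html):
    # Site A (hypothetical layout): <span class="name">X</span> <b>$12.50</b>
    pattern = re.compile(r'<span class="name">(.*?)</span>\s*<b>\$([\d.]+)</b>')
    return [Offer("site-a", t.strip(), Decimal(p)) for t, p in pattern.findall(html)]


def extract_site_b(html):
    # Site B (hypothetical layout): <td>12,50 USD</td><td>X</td>, comma as decimal mark
    pattern = re.compile(r"<td>([\d,]+) USD</td><td>(.*?)</td>")
    return [
        Offer("site-b", t.strip(), Decimal(p.replace(",", ".")))
        for p, t in pattern.findall(html)
    ]


def meta_search(pages):
    """pages: list of (extractor, html) pairs. Returns all offers, cheapest first."""
    offers = [offer for extract, html in pages for offer in extract(html)]
    return sorted(offers, key=lambda o: o.price)
```

Adding another site then means writing one more extractor that returns `Offer` records; the merging and ranking code does not change.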

Archiving

Because of the dynamic nature of web sites, their content changes frequently, so archiving requires snapshots of web pages. These snapshots should capture each page as it appeared at the time, including its multimedia content.

- Archiving receipts of web transactions

- Building static web sites from dynamic content

- Archiving snapshots of web content for analysing historic data
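A basic archiver stores the page exactly as served, together with a timestamp, a content hash (so that later snapshots can be compared cheaply) and the list of multimedia resources the page references. The directory layout and the `manifest.json` format below are assumptions for illustration; downloading the listed resources is left out to keep the sketch offline.

```python
import hashlib
import json
from datetime import datetime, timezone
from html.parser import HTMLParser
from pathlib import Path
from urllib.parse import urljoin

# Tag/attribute pairs that commonly point at images, audio, video, styles and scripts.
RESOURCE_ATTRS = {("img", "src"), ("audio", "src"), ("video", "src"),
                  ("source", "src"), ("link", "href"), ("script", "src")}


class ResourceCollector(HTMLParser):
    """Collects absolute URLs of the resources the page depends on."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.resources = []

    def handle_starttag(self, tag, attrs):
        for name, value in attrs:
            if (tag, name) in RESOURCE_ATTRS and value:
                self.resources.append(urljoin(self.base_url, value))


def archive_snapshot(html, url, archive_dir):
    """Write page.html and manifest.json into a new timestamped folder."""
    taken_at = datetime.now(timezone.utc)
    folder = Path(archive_dir) / taken_at.strftime("%Y%m%dT%H%M%S%fZ")
    folder.mkdir(parents=True)
    collector = ResourceCollector(url)
    collector.feed(html)
    collector.close()
    (folder / "page.html").write_text(html, encoding="utf-8")
    manifest = {
        "url": url,
        "taken_at": taken_at.isoformat(),
        "sha256": hashlib.sha256(html.encode("utf-8")).hexdigest(),
        "resources": collector.resources,
    }
    (folder / "manifest.json").write_text(json.dumps(manifest, indent=2), encoding="utf-8")
    return folder
```

Comparing the `sha256` value of consecutive snapshots shows whether a page changed, which keeps historic archives small when content is stable.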

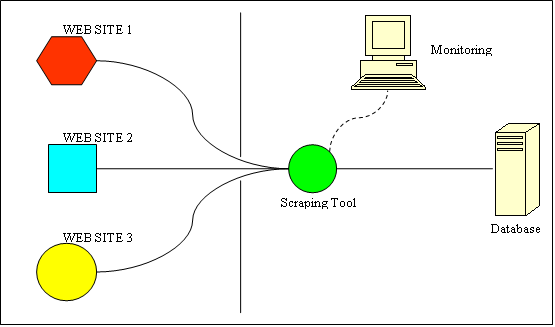

Screen scraping has been successfully implemented in our project and is widely used in day-to-day activities. The architecture of the application is illustrated in the accompanying figure. The solution was designed and developed in-house within the project.

