Sep 29, 2009

Web Performance/Profiling Techniques - Best Practices

http://code.google.com/speed/page-speed/docs/rules_intro.html

Optimizing caching — keeping your application's data and logic off the network altogether

Minimizing round-trip times — reducing the number of serial request-response cycles

Minimizing request size — reducing upload size

Minimizing payload size — reducing the size of responses, downloads, and cached pages

Optimizing browser rendering — improving the browser's layout of a page

Validating the Security of Web Based Applications

Introduction

Web applications are used for a variety of functions, such as booking tickets, shopping, and banking, that involve large sums of money, so they must be robust and free of exploitable vulnerabilities. Well-secured web applications also attract more use. Validation of a web application should therefore cover not only functionality and load testing but also the security of the application against known vulnerabilities.

The emergence of web applications has brought many changes to the way business is done. As the usage of web applications increases, so does the number of security attacks against them.

A few attacks that drew attention:

- In 2006, K2 Networks Inc., an operator of Asian games for the US market, claimed to have lost over USD 1 million in a single year due to account compromises. The attacks cited were SQL Injection attacks.

- “PetCo plugs credit card leak”: around 500,000 (5 lakh) credit card numbers were exposed to hackers through a SQL Injection attack.

This article focuses on some of the Command Execution attacks that target web applications. It describes ways to detect these attacks and methods for validating the security of web applications, and it mentions some freeware tools that can help detect these threats.

Command Execution Attacks

The Command Execution section covers attacks designed to execute remote commands on the web site. All web sites use user-supplied input to fulfill requests. Often this user-supplied data is used to construct commands that produce dynamic web page content. If this is done insecurely, an attacker can alter the commands that are executed.

Some of these attacks are as follows:

- Buffer Overflow Attack - Buffer Overflow exploits are attacks that alter the flow of an application by overwriting parts of memory.

- Format String Attack - Format String Attacks alter the flow of an application by using formatting library features to access other memory space.

- LDAP Injection - LDAP Injection is an attack technique used to exploit web sites that construct LDAP statements from user-supplied input.

- OS Commanding - OS Commanding is an attack technique used to exploit web sites by executing Operating System commands through manipulation of application input.

- SQL Injection - SQL Injection is an attack technique used to exploit web sites that construct SQL statements from user-supplied input.

- SSI Injection - SSI Injection (Server-side Include) is a server-side exploit technique that allows an attacker to send code into a web application, which will later be executed locally by the web server.

- XPATH Injection - XPATH Injection is an attack technique used to exploit web sites that construct XPATH queries from user-supplied input.

1. Buffer Overflow

A buffer overflow occurs when a program is given more data than a buffer or variable can hold. Because the buffer has a fixed size, the excess data is written into the adjacent storage area and corrupts it.

Attackers exploit this by supplying their own code as the extra data. Once stored in the adjacent area, that code may get executed and cause adverse effects such as denial of service, disclosure of confidential information, or modification of personal data.

Buffer overflow attacks are common in web based applications. Testing for them is difficult unless the tester reads the code line by line, looking for places where data can overflow. Some freeware tools are available to help test for buffer overflows.

One such tool is “NTOMax”. It takes a text file as input and tries to find the places where a buffer overflow could occur. The input file should contain the host name, port, minimum buffer size, and maximum limit.
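The idea of probing with inputs between a minimum and a maximum size can be sketched in a few lines. This is only an illustration of the approach, not NTOMax's actual input format or behavior; the function name `overflow_payloads` and the `step` and `fill` parameters are assumptions made for the example. Sending the payloads to a host and port is left to the tester.

```python
def overflow_payloads(min_size, max_size, step, fill="A"):
    """Yield test strings whose lengths run from min_size to max_size.

    Hypothetical helper: each payload is just a run of `fill` characters,
    which a tester could submit to a field to see where handling breaks.
    """
    if min_size < 1 or max_size < min_size or step < 1:
        raise ValueError("expected 1 <= min_size <= max_size and step >= 1")
    for size in range(min_size, max_size + 1, step):
        yield fill * size


# Lengths of the payloads generated for sizes 8 to 32 in steps of 8.
lengths = [len(p) for p in overflow_payloads(8, 32, 8)]
```

Starting small and growing the input makes it easier to identify the smallest length at which the target misbehaves.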

Because web applications read all types of input, a buffer overflow can occur wherever the application calls services or DLLs written in memory-unsafe languages such as C/C++.

Mitigation methods include using safe string libraries and container abstractions. Safe string functions take the size of the destination buffer as an input parameter to ensure that they do not write past the end of the buffer. They also null-terminate all output strings to avoid problems caused by a missing null terminator.

The best way to prevent buffer overflow problems is to validate the input data, including its length, before using it in the application.
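As a minimal sketch of validating input before it reaches native code, the check below rejects values that would not fit a fixed-size destination. The limit `MAX_FIELD_BYTES` and the function name `validate_length` are assumptions for illustration; a real limit must come from the component that owns the buffer.

```python
MAX_FIELD_BYTES = 64  # assumed size of the destination buffer, minus the terminator


def validate_length(value, max_bytes=MAX_FIELD_BYTES):
    """Return the UTF-8 bytes of value, or raise ValueError if it is unsafe.

    The length is checked in bytes, not characters, because a native
    buffer is sized in bytes. Embedded NUL bytes are rejected because
    C string functions would treat them as the end of the string.
    """
    encoded = value.encode("utf-8")
    if len(encoded) > max_bytes:
        raise ValueError("input longer than %d bytes" % max_bytes)
    if b"\x00" in encoded:
        raise ValueError("input contains a NUL byte")
    return encoded
```

Rejecting oversized input outright, rather than silently truncating it, keeps the failure visible to the caller.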

There are two types of buffer overflow: stack-based and heap-based.

2. SQL Injection

In this type of attack, the attacker injects SQL commands into application input in order to retrieve data from the application. SQL injection can also be used to add, modify, or delete records and tables.

Attackers supply input that alters the SQL query the application builds, often by first making it invalid. There are two types of SQL injection: (1) Normal SQL Injection and (2) Blind SQL Injection.

In Normal SQL Injection attacks, the attacker supplies input that makes the SQL query invalid. When the invalid query is executed, the database returns error messages. From these error messages, the attacker can work out the database structure and the fields in the tables. By repeating this method, the attacker becomes familiar with the entire structure of the database.
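The following sketch shows, against an in-memory SQLite database, how a query built by string concatenation reacts to crafted input. The table, its contents, and the function names are hypothetical and exist only for this example; the error text a real database returns will differ by product.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (owner TEXT, balance INTEGER)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)",
                 [("alice", 100), ("bob", 250)])


def find_accounts_unsafe(owner):
    # Deliberately vulnerable: user input is pasted into the SQL text.
    query = "SELECT owner, balance FROM accounts WHERE owner = '" + owner + "'"
    return conn.execute(query).fetchall()


def probe(owner):
    """Return the rows, or the database error text an attacker would see."""
    try:
        return find_accounts_unsafe(owner)
    except sqlite3.Error as exc:
        return "database error: %s" % exc


normal = probe("alice")            # only alice's row
broken = probe("alice'")           # stray quote makes the query invalid
everyone = probe("x' OR '1'='1")   # tautology matches every row
```

The error text returned for the stray quote is the kind of message that reveals how the query is built, and the tautology shows the same flaw returning rows the caller should never see.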

In Blind SQL Injection attacks, the developers have suppressed the error messages generated by invalid SQL queries. The attacker therefore uses trial and error to construct valid SQL queries that reveal information about the database.
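A tester can reproduce this trial-and-error approach against a test database. The sketch below uses an in-memory SQLite database and a deliberately vulnerable lookup that suppresses errors and reveals only true or false; the schema and the names `user_exists` and `infer_pin_length` are assumptions for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, pin TEXT)")
conn.execute("INSERT INTO users VALUES ('alice', '4821')")


def user_exists(name):
    # Deliberately vulnerable: concatenated SQL, errors hidden from the caller.
    query = "SELECT 1 FROM users WHERE name = '" + name + "'"
    try:
        return conn.execute(query).fetchone() is not None
    except sqlite3.Error:
        return False


def infer_pin_length(max_len=16):
    """Ask one true/false question per candidate length."""
    for n in range(1, max_len + 1):
        # The trailing "--" comments out the quote the application appends.
        probe = "alice' AND length(pin) = %d --" % n
        if user_exists(probe):
            return n
    return None


pin_length = infer_pin_length()
```

No error message is ever shown, yet each yes-or-no answer narrows down a hidden value, which is why suppressing errors alone does not remove the vulnerability.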

Checking for SQL Injection in the web based applications can be done in the following ways:

- Analyzing the present state of security by performing a thorough audit of your website and web applications for SQL Injection and other hacking vulnerabilities.

- Making sure that you follow coding best practices for sanitizing input in your web applications and all other components of your IT infrastructure.

- Regularly performing a web security audit after each change and addition to your web components.
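One widely used coding practice against SQL Injection is to pass user input as bound parameters instead of concatenating it into the SQL text. The sketch below uses Python's standard `sqlite3` module with a hypothetical `accounts` table; it is an example of the practice, not a procedure prescribed by this article.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE accounts (owner TEXT, balance INTEGER)")
conn.executemany("INSERT INTO accounts VALUES (?, ?)",
                 [("alice", 100), ("bob", 250)])


def find_accounts(owner):
    # The "?" placeholder keeps the input as data; it is never parsed as SQL.
    return conn.execute(
        "SELECT owner, balance FROM accounts WHERE owner = ?", (owner,)
    ).fetchall()


legit = find_accounts("alice")
attack = find_accounts("x' OR '1'='1")  # treated as a literal owner name
```

With bound parameters the injected text is compared as an ordinary string, so the tautology matches no rows.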

An automated web vulnerability scanner scans the entire web site and checks the areas that are prone to attack, including the URLs that are open to SQL Injection. One such scanner, available free for a limited trial period, is Acunetix Web Vulnerability Scanner. The scanner:

- Provides an immediate and comprehensive website security audit

- Ensures your website is secure against web attacks

- Checks for SQL injection, cross-site scripting, and other vulnerabilities

- Audits shopping carts, forms, and dynamic content

- Scans your entire website and web applications, including JavaScript / AJAX applications, for security vulnerabilities.

3. XPATH Injection

Any web application that uses XML to store and retrieve data is prone to XPATH Injection. XML stores data in a node tree structure, and the XPATH language is used to retrieve information from the nodes of an XML document. When an application has to retrieve data from the XML document based on user input, it sends an XPATH query that is executed on the server. Some sample XPATH queries are as follows:

| XPATH query | Meaning |
| --- | --- |
| / | Selects the root node of the tree |
| /users | Selects all "users" child elements of the root node |
| /users/user[@admin='true'] | Selects all "user" child elements whose "admin" attribute has the value "true" |
| /* | Selects all child elements of the root |
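To try queries like these, the sketch below evaluates them with Python's standard `xml.etree.ElementTree`. The sample document is invented for the example. ElementTree supports only a subset of XPATH and expects paths relative to an element, so `/users/user[@admin='true']` is written here as `./user[@admin='true']` evaluated from the `users` element.

```python
import xml.etree.ElementTree as ET

# Hypothetical document with two users, one of them an administrator.
XML = """<users>
  <user admin="true"><name>alice</name></user>
  <user admin="false"><name>bob</name></user>
</users>"""

root = ET.fromstring(XML)  # the <users> element

# Equivalent of /users/user[@admin='true']
admins = root.findall("./user[@admin='true']")
admin_names = [user.findtext("name") for user in admins]

# Equivalent of selecting every child element of <users>
child_tags = [child.tag for child in root.findall("./*")]
```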

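Just as SQL injection works by injecting the attacker's query into the application to retrieve hidden information, XPATH injection uses a similar technique. The attacker injects his own XPATH query and makes the web server execute it instead of the one defined in the application. Two ways of performing XPATH Injection are Blind attacks and Single Query attacks.

The key to executing a Blind XPATH Injection attack is the ability to “ask” the server a series of true or false “questions” in order to learn about the data structure and then query it as required.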
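How user input can change an XPATH query is easiest to see in the query text itself. The sketch below only builds strings and does not evaluate them; the element names `name` and `password` and the function name are assumptions for illustration.

```python
def build_login_query_unsafe(username, password):
    # Deliberately vulnerable: input is concatenated into the XPATH text.
    return ("/users/user[name='" + username +
            "' and password='" + password + "']")


normal = build_login_query_unsafe("alice", "secret")

# In XPATH, "and" binds more tightly than "or", so the injected predicate
# reads: name='alice' or ('1'='1' and password='x').
# It is true for alice's node whatever password was supplied.
injected = build_login_query_unsafe("alice' or '1'='1", "x")
```

A blind attack uses the same opening to append conditions whose truth or falsehood shows up in the application's response.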

AJAX applications are especially vulnerable because XML fragments are sent to the client for processing. When XPATH query result fragments are processed directly on the client, attacks can be carried out with a script-kiddie level of simplicity. The XPATH Single Query Attack method allows an attacker to extract the backend document with a single request to the server. Single Query Attacks run quickly and are much harder to detect as attacks, and unlike blind forms of injection they are not tedious to perform. Three properties of XPATH make attacks against AJAX applications extremely effective: simple joins, error resilience, and easy top-level document access.

The best method for preventing XPATH injection is to validate user input properly for both type and format. All client-supplied data needs to be cleansed of any characters or strings that could be used maliciously. The best way of doing this is “white listing”: accepting only specific data for specific fields, such as limiting user input to account numbers or account types for those fields, or accepting only integers or letters of the English alphabet for others.

Many developers instead try to validate input by “black listing” characters or “escaping” them. Black listing means rejecting known bad data, such as a single quotation mark; escaping means placing an “escape” character in front of such a character so that it is treated as a literal value. Stripping quotes or putting backslashes in front of them is not enough, and is not as effective as white listing, because it is impossible to know all forms of bad data ahead of time.

A good method of filtering data is by using a default-deny regular expression.

Write the expression so that it keeps only the types of characters you want. For instance, the following substitution removes every character that is not a letter or a number: s/[^0-9a-zA-Z]//g

Make your filter narrow and specific. Whenever possible, accept only numbers; failing that, accept numbers and letters only. If you need to accept symbols or punctuation of any kind, make absolutely sure to convert them to their HTML substitutes, such as &quot; or &gt;. For instance, if the user is submitting an e-mail address, allow only the “at” sign, underscore, period, and hyphen in addition to numbers and letters, and allow them only after those characters have been converted to their HTML substitutes.
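The default-deny filter and the HTML substitution described above can be sketched with Python's standard `re` and `html` modules. The function names are assumptions for the example, and the e-mail check only restricts the character set; it does not verify that the value is a well-formed address.

```python
import html
import re


def keep_alnum(value):
    """Default-deny filter: drop everything except letters and digits."""
    return re.sub(r"[^0-9a-zA-Z]", "", value)


# Letters, digits, and the four symbols allowed for e-mail input.
EMAIL_CHARS = re.compile(r"[0-9a-zA-Z@_.\-]+")


def has_only_email_chars(value):
    return EMAIL_CHARS.fullmatch(value) is not None


def encode_for_html(value):
    """Convert &, <, >, and quotes to their HTML entities."""
    return html.escape(value, quote=True)
```

Because the pattern lists what is allowed rather than what is forbidden, any character nobody thought of in advance is rejected by default.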

Freeware tools available to detect XPATH injection include Paros from SourceForge and WSDigger from Foundstone (McAfee).

Paros

Paros is a Java based HTTP/HTTPS proxy for assessing web application vulnerability. It supports viewing and editing HTTP messages on the fly. Other features include spiders, client certificates, proxy chaining, and intelligent scanning for XSS and SQL injection. It is platform independent and requires only JRE 1.4. The Paros tool provides the following functions.

Spider

The spider crawls websites and gathers as many URL links as possible. This gives you a better understanding of the website hierarchy tree in a short time, before manual navigation. It supports cookies and proxy chaining, which are configured in the Option tab. URL links are added automatically to the website hierarchy tree for later scanning.

Scanner

The scanner scans the server based on the website hierarchy and can check for server misconfiguration. A fully automatic web scanner may not be able to discover every path, and so may miss backup files (.bak) that could expose server information. For this reason you need to navigate the website before using this function. After you log on to a website and navigate it, Paros builds a website hierarchy tree automatically. You can then do the following:

a) To scan all websites on the tree, click the menu item "Tree" => "Scan All".

b) To scan just one website on the tree, select that site in the tree panel and click the menu item "Tree" => "Scan selected Node". (You can also right-click in the tree view and choose these options.)

Paros includes the following checks:

- HTTP PUT allowed - check if the PUT option is enabled at server directories

- Directory index table - check if the server directories can be browsed.

- Obsolete files existed - check if there exist obsolete files at

- Cross-site scripting - check if Cross-site scripting (XSS) is allowed on the query parameters

Note that all the above checks are based on the URLs in the website hierarchy, so the scanner checks each URL for each vulnerability. Compared with web scanners that run a blind scan without a website hierarchy, this approach gives more accurate results.

Filter

Filters detect the occurrence of certain predefined patterns in HTTP messages and alert you to them, so that you do not need to trap every HTTP message and search for the pattern yourself. You can also use filters to log information you are interested in. Paros has the following filters:

a) LogCookie

b) LogGetQuery

c) LogPostQuery

d) CookieDetectFilter

e) IfModifiedSinceFilter

WSDigger Tool

The WSDigger tool from McAfee is helpful in testing because it offers the following features:

- Built-in sample attack plug-ins: SQL injection, cross-site scripting, and XPATH injection.

- Automated web services discovery: The tool analyzes public and/or private UDDI to discover web services related to search strings.

- Automated attack vector discovery: It analyzes the web service to determine potential points of attack.

- Automated and manual exploit testing: It can be used to manually inject malicious data and to generate test cases that are executed automatically through the attack plug-ins.

- HTML reporting: It reports the results and test case history for further analysis.

4. Format String Attack

Format string vulnerabilities became widely known only in the late 1990s. A format string injection takes advantage of a string formatting function to attack the system.

A format string vulnerability occurs when user-supplied data is used directly as the format string argument of a formatting function. Format string attacks alter the flow of an application by using string formatting library features to access memory space.

Consider a programmer who wants to print out a string or copy it to some buffer. What he means to write is something like:

printf ("%s", str); but instead he decides that he can save time, effort and 6 bytes of source code by typing: printf (str);

In the second form, the programmer intends str to be printed as is, but printf interprets its first argument as a format string. It scans the string for format specifiers such as "%d", and for each one it encounters it retrieves an argument value from the stack. At the very least, an attacker can peek into the memory of the program by printing out the values stored on the stack.
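The difference between the two calls can be illustrated with Python's `%` formatting operator, used here purely as an analogy. Python raises an exception instead of reading the stack, so this shows only that the input is interpreted as a format string, not the memory disclosure that C's printf allows. The function names are assumptions for the example.

```python
def render_unsafe(user_input):
    # Analogue of printf(str): the user's text is the format string.
    return user_input % ()


def render_safe(user_input):
    # Analogue of printf("%s", str): the user's text is only data.
    return "%s" % (user_input,)


safe_output = render_safe("%x.%x")      # specifiers are printed literally

try:
    render_unsafe("%x.%x")              # specifiers are interpreted
    interpreted = False
except TypeError:
    interpreted = True                  # Python found no arguments to format
```

Plain text passes through both functions unchanged, which is why this kind of bug can go unnoticed until input containing a specifier arrives.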

Format string attacks can be used to read data from the stack, read character strings from the process’s memory, and write an integer to locations in the process’s memory.

Format string attacks fall into three categories:

- Denial of Service

- Reading

- Writing

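Denial of Service: Format string denial-of-service attacks typically supply several %s format specifiers so that the program keeps reading from the stack until it dereferences an illegal address and crashes.

Reading: Format string reading attacks attempt to read memory that the attacker does not normally have access to. This technique uses %x format specifiers to print sections of memory.

Writing: Format string writing attacks use the %d, %u, or %x format specifiers to control the number of characters output, together with the %n conversion character, which writes that count as an integer to a location in memory. In this way an attacker can overwrite the instruction pointer and force the execution of attacker-supplied shellcode.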

The key to testing format string vulnerabilities is supplying format type specifiers in application input.

For example, consider an application that processes a URL string such as http://abcd.com/html/en/index.htm, or that accepts input from a form. If a format string vulnerability exists in one of the routines processing this information, supplying a URL like http://xyzhost.com/html/en/index.htm%n%n%n, or passing %n in one of the form fields, might crash the application and create a core dump in the hosting folder.

Format string vulnerabilities are more common in web servers, application servers, or web applications that use C/C++ code or CGI scripts written in C. In most of these cases, an error reporting or logging function such as syslog() has been called insecurely.

When testing CGI scripts for format string vulnerabilities, the input parameters can be manipulated to include %x or %n type specifiers. For example, a legitimate request like http://hostname/cgi-bin/query.cgi?name=allen&code=45000 can be altered to http://hostname/cgi-bin/query.cgi?name=allen%x.%x.%x&code=45000%x.%x. If a format string vulnerability exists in the routine processing this request, the tester will be able to see stack data being printed out to the browser.
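
A tester can generate such parameter values programmatically. The following is a minimal sketch with a hypothetical helper name. It assumes the request is sent with the percent sign encoded as %25, since a raw "%x" is not a valid percent-encoded sequence in a URL and may be rejected or altered before it reaches the CGI script.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical test helper: writes `value` followed by `repeats` probes
 * into `out`. Each probe is the text "%25x" (a URL-encoded "%x"), and
 * probes are joined by '.'. Returns 0 on success, -1 if `out` is too
 * small (in which case `out` is left untouched). */
int build_format_probe(char *out, size_t out_size,
                       const char *value, int repeats)
{
    assert(out != NULL && out_size > 0 && value != NULL && repeats >= 0);
    size_t probe_len = repeats > 0
        ? (size_t)repeats * 4 + (size_t)(repeats - 1)
        : 0;
    if (strlen(value) + probe_len + 1 > out_size)
        return -1;
    size_t used = (size_t)snprintf(out, out_size, "%s", value);
    for (int i = 0; i < repeats; i++) {
        /* "%%25x" in the format string emits the literal text "%25x". */
        used += (size_t)snprintf(out + used, out_size - used,
                                 "%s%%25x", i == 0 ? "" : ".");
    }
    return 0;
}
```

Calling build_format_probe(buf, sizeof buf, "allen", 3) fills buf with allen%25x.%25x.%25x, which the server decodes to the name value used in the example request.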

Several tools are available to check source code for format string vulnerabilities. In manual reviews, priority should be given to the formatting functions that are specific to the development platform, and each call should be checked to confirm that user-supplied data is never used as the format string.

ITS4 is a tool for statically scanning security-critical C and C++ source code for vulnerabilities. The ITS4 tool, along with its source code, is available from http://www.rstcorp.com/its4/.

Conclusion

Apart from the Command Execution attacks discussed, Client Side Attacks also challenge the security of web based applications.

Client-side attacks focus on the abuse or exploitation of a web site’s users. When a user visits a web site, trust is established between the two parties both technologically and psychologically. A user expects web sites they visit to deliver valid content. A user also expects the web site not to attack them during their stay. By leveraging these trust relationship expectations, an attacker may employ several techniques to exploit the user.

In Content Spoofing, the attacker tricks a user into believing that certain content appearing on a web site is legitimate and not from an external source.

In Cross-site Scripting (XSS), the web site is forced to echo attacker-supplied executable code, which loads in a user’s browser.

Web applications are used for a variety of functions, such as booking tickets, shopping and banking, that involve a lot of money, so they should be made robust and free from vulnerabilities. Web applications will be used more if they are well secured. Validation of a web application should focus not only on functionality and load testing but also on the security of the application against vulnerabilities.


Validating the Security of Web Based Applications

Introduction


The emergence of web applications has brought a lot of change to the field of business. As the usage of web applications increases, so does the number of security attacks they attract.

A few attacks that grabbed attention are:

- In 2006, K2 Networks Inc, an operator of Asian games for the US market, claimed to have lost over USD 1 million in a single year due to account compromises. The attacks that were cited were SQL Injection attacks.

- “PetCo plugs credit card leak”: around 5 lakh (500,000) credit card numbers were exposed to hackers through an SQL injection attack.

This article focuses on some of the Command Execution attacks that target web applications. It also describes ways to detect these attacks and methodologies to validate the security of those web applications, and it touches upon some of the freeware tools that are available to detect these threats.

Command Execution Attacks

The Command Execution section covers attacks designed to execute remote commands on the web site. All web sites utilize user-supplied input to fulfill requests. Often this user-supplied data is used to construct commands that produce dynamic web page content. If this process is done insecurely, an attacker could alter command execution.

Some of the attacks are as follows:

- Buffer Overflow Attack - Buffer Overflow exploits are attacks that alter the flow of an application by overwriting parts of memory.

- Format String Attack - Format String Attacks alter the flow of an application by using formatting library features to access other memory space.

- LDAP Injection - LDAP Injection is an attack technique used to exploit web sites that construct LDAP statements from user-supplied input.

- OS Commanding - OS Commanding is an attack technique used to exploit web sites by executing Operating System commands through manipulation of application input.

- SQL Injection - SQL Injection is an attack technique used to exploit web sites that construct SQL statements from user-supplied input.

- SSI Injection - SSI Injection (Server-side Include) is a server-side exploit technique that allows an attacker to send code into a web application, which will later be executed locally by the web server.

- XPATH Injection - XPATH Injection is an attack technique used to exploit web sites that construct XPATH queries from user-supplied input.

1. Buffer Overflow

The term Buffer Overflow means “providing more data than a buffer or variable can hold”. Since the size of the variable is fixed, it cannot hold more than that size, and the excess data is written into the adjacent storage area, corrupting that area as well.

Hackers make use of this by injecting their code in the form of extra data, which gets stored in the adjacent storage area. This extra data can get executed and may cause adverse effects, such as Denial of Service, disclosure of confidential information and changes to personal data.

Buffer Overflow attacks are common in web based applications. Testing for these attacks is very tricky unless the tester goes through the code line by line looking for places where data can overflow. Some freeware tools are available that can be used for testing buffer overflow.

One such tool is called “NTOMax”. This tool will take a text file as input and will try to catch the places where Buffer Overflow could occur. The input file should contain the host name, port, minimum buffer size and maximum limit.

Since web applications read all types of input, there is a high possibility that a Buffer Overflow can occur wherever the web based application interacts with services and DLLs written in non-safe languages like C/C++.

Mitigation methods include using safe string libraries and container abstractions. The safe string functions take the size of the destination buffer as an input parameter, to ensure that the function does not write past the end of the buffer. These functions also null-terminate all output strings, to avoid problems caused by a missing null terminator.
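
A function in this style can be sketched as follows. The name copy_bounded is hypothetical and is not taken from any particular safe string library; the sketch only illustrates the two properties described above, a size-limited write and guaranteed null termination.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>

/* Hypothetical helper in the style of a "safe string" function:
 * copies `src` into `dst`, never writing more than `dst_size` bytes,
 * and always null-terminates. Returns 1 if `src` fit, 0 if it was
 * truncated. */
int copy_bounded(char *dst, size_t dst_size, const char *src)
{
    assert(dst != NULL && dst_size > 0 && src != NULL);
    /* snprintf reports the length it would have needed, so a result of
     * dst_size or more means the output was cut short. */
    int needed = snprintf(dst, dst_size, "%s", src);
    return needed >= 0 && (size_t)needed < dst_size;
}
```

Copying "hello world" into an 8-byte buffer yields "hello w" and a return value of 0, so the caller can detect the truncation instead of silently overflowing.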

The best way to prevent buffer overflow problems is to validate the input data before using it in the application.

There are two types of Buffer overflow: Stack based and heap based.

2. SQL Injection

This type of attack injects SQL commands into application input in order to retrieve data from the application. Other effects of SQL injection attacks include adding, modifying and deleting records or tables in the application.

Hackers supply input that generates an invalid SQL query in order to extract data from the web application. There are two types of SQL injection: (1) Normal SQL Injection and (2) Blind SQL Injection.

In Normal SQL Injection attacks, the hacker tries to write an invalid SQL query. When this invalid query is executed, the database returns error messages. Using these error messages, the hacker can determine the database structure and the fields in the tables. By repeating this method, the hacker becomes familiar with the entire structure of the database.

In Blind SQL Injection attacks, the error messages that get generated due to invalid SQL queries are suppressed by the developers. Hence, the hackers use trial and error methods to construct a valid SQL query in order to retrieve information about the database.
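
To see why user input can change the meaning of a query, consider an application that builds its SQL text by pasting the input between quotes. The sketch below only constructs the string and does not talk to a database; the table name, column name and function name are hypothetical.

```c
#include <assert.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical query text; the table and column are illustrative. */
static const char QUERY_PREFIX[] = "SELECT * FROM users WHERE name = '";

/* Illustration only: builds a query by pasting user input into the SQL
 * text, which is the pattern that makes injection possible.
 * Returns 0 on success, -1 if `out` is too small. */
int build_query_unsafe(char *out, size_t out_size, const char *name)
{
    assert(out != NULL && out_size > 0 && name != NULL);
    /* sizeof counts the prefix's terminating null; +1 is the closing quote. */
    if (strlen(name) + sizeof QUERY_PREFIX + 1 > out_size)
        return -1;
    snprintf(out, out_size, "%s%s'", QUERY_PREFIX, name);
    return 0;
}
```

For the input x' OR '1'='1 the resulting text is SELECT * FROM users WHERE name = 'x' OR '1'='1', a condition that is true for every row. A single stray quote, by contrast, produces the invalid query and error message that the Normal SQL Injection attack relies on.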

Checking for SQL Injection in the web based applications can be done in the following ways:

- Analyzing the present state of security by performing a thorough audit of your website and web applications for SQL Injection and other hacking vulnerabilities.

- Making sure that you follow coding best practices and sanitize input in your web applications and all other components of your IT infrastructure.

- Regularly performing a web security audit after each change and addition to your web components.

An automated web vulnerability scanner scans the entire web site and checks the areas that are prone to attacks. By scanning, it will find the URLs that can be attacked by SQL Injection. One such scanner, available as freeware for a limited trial period, is Acunetix Web Vulnerability Scanner. It provides an immediate and comprehensive website security audit that:

- Ensures your website is secure against web attacks

- Checks for SQL injection, Cross site scripting and other vulnerabilities

- Audits shopping carts, forms, and dynamic content

- Scans all your website and web applications including JavaScript / AJAX applications for security vulnerabilities.

3. XPATH Injection

Any web application that uses XML to store and retrieve data is prone to XPATH Injection attacks. Data is stored in XML in a node tree structure. The XPATH language is used for retrieving information from the nodes of an XML document. When an application has to retrieve data from the XML document based on user input, it sends an XPATH query, which gets executed on the server. Some sample XPATH queries are as follows:

| Query | Description |
| --- | --- |
| / | Selects the root node of the tree |
| /users | Selects all "users" child elements of the root node |
| /users/user[@admin='true'] | Selects all user child elements whose “admin” attribute has the value “true” |
| /* | Selects all elements of the root |

As with SQL injection, where the attacker's query is injected into the application to retrieve hidden information, XPATH injection uses a similar technique. The attacker injects his own XPATH query and makes the web server execute it instead of the one defined in the application. Two ways of performing XPATH Injection are Blind attacks and Single Query attacks.

The key to executing a Blind XPATH Injection attack is the ability to “ask” the server a series of true or false “questions” in order to learn about the data structure and then query it as required.

AJAX applications are especially vulnerable because XML fragments are sent to the client for processing. When XPATH query result fragments are processed directly on the client, attacks can be perpetrated with a script-kiddie level of simplicity. The XPATH Single Query Attack method allows an attacker to extract the backend document with a single request to the server. Single Query Attacks run quickly and are much harder to detect as attacks. Unlike blind forms of injection attacks, they are not tedious to perform. There are three properties of XPATH that make attacks against AJAX applications extremely effective: simple joins, error resilience, and easy top level document access.

The best method for preventing XPATH injection vulnerability is by properly validating user input for both type and format. All client-supplied data needs to be cleansed of any characters or strings that could possibly be used maliciously. The best method of doing this is via “white listing”. This is defined as only accepting specific data for specific fields, such as limiting user input to account numbers or account types for those relevant fields, or only accepting integers or letters of the English alphabet for others. Many developers will try to validate input by “black listing” characters, or “escaping” them. Basically, this entails rejecting known bad data, such as a single quotation mark, by placing an “escape” character in front of it so that the item that follows will be treated as a literal value. Stripping quotes or putting backslashes in front of them is not enough, and is not as effective as white listing because it is impossible to know all forms of bad data ahead of time.
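
White listing for a single field can be sketched as below, assuming a field that should contain only an account number made of digits. The function names, the 12-character limit and the element and attribute names in the query are all hypothetical.

```c
#include <assert.h>
#include <ctype.h>
#include <stddef.h>
#include <stdio.h>
#include <string.h>

/* Whitelist check: accepts only non-empty strings of ASCII digits,
 * up to `max_len` characters. Everything else is rejected. */
int is_valid_account_number(const char *input, size_t max_len)
{
    assert(input != NULL);
    size_t len = strlen(input);
    if (len == 0 || len > max_len)
        return 0;
    for (size_t i = 0; i < len; i++) {
        if (!isdigit((unsigned char)input[i]))
            return 0;
    }
    return 1;
}

/* Builds an XPATH query only when the input passes the whitelist.
 * The element and attribute names are hypothetical.
 * Returns 0 on success, -1 if the input is rejected or `out` is too small. */
int build_account_query(char *out, size_t out_size, const char *account)
{
    assert(out != NULL && out_size > 0 && account != NULL);
    if (!is_valid_account_number(account, 12))
        return -1;
    int n = snprintf(out, out_size, "/accounts/account[@id='%s']", account);
    return (n >= 0 && (size_t)n < out_size) ? 0 : -1;
}
```

Because the check names what is allowed rather than what is forbidden, an input such as 1' or '1'='1 is rejected without the code needing to know anything about quotes or XPATH operators.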

A good method of filtering data is by using a default-deny regular expression.

Make it so that you include only the type of characters that you want. For instance, the following substitution removes everything except letters and numbers: s/[^0-9a-zA-Z]//g
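
The same default-deny filter can be written without a regular expression library. This is a minimal sketch with a hypothetical function name; it assumes ASCII input and modifies the string in place.

```c
#include <assert.h>
#include <stddef.h>

/* Removes, in place, every character that is not an ASCII letter or
 * digit. Returns the length of the filtered string. */
size_t keep_alnum_only(char *s)
{
    assert(s != NULL);
    size_t kept = 0;
    for (size_t i = 0; s[i] != '\0'; i++) {
        char c = s[i];
        int allowed = (c >= '0' && c <= '9') ||
                      (c >= 'a' && c <= 'z') ||
                      (c >= 'A' && c <= 'Z');
        if (allowed)
            s[kept++] = c;   /* keep only whitelisted characters */
    }
    s[kept] = '\0';
    return kept;
}
```

Anything not explicitly listed is dropped, so quotes, semicolons, spaces and hyphens all disappear from the input.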

Make your filter narrow and specific. Whenever possible, use only numbers. After that, numbers and letters only. If you need to include symbols or punctuation of any kind, make absolutely sure to convert them to HTML substitutes, such as &quot; or &gt;. For instance, if the user is submitting an e-mail address, allow only the “at” sign, underscore, period, and hyphen in addition to numbers and letters, and allow them only after those characters have been converted to their HTML substitutes.
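
Converting characters to their HTML substitutes can be sketched as follows. The function name is hypothetical, and the set of characters converted here (ampersand, angle brackets and the double quote) is an assumption chosen for illustration rather than a complete list for every context.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Copies `src` into `dst`, replacing the characters & < > " with their
 * HTML entities. Returns 0 on success, -1 if `dst` is too small; in
 * both cases `dst` is left null-terminated. */
int html_escape(char *dst, size_t dst_size, const char *src)
{
    assert(dst != NULL && dst_size > 0 && src != NULL);
    size_t used = 0;
    for (; *src != '\0'; src++) {
        const char *rep;
        char single[2] = { *src, '\0' };
        switch (*src) {
        case '&':  rep = "&amp;";  break;
        case '<':  rep = "&lt;";   break;
        case '>':  rep = "&gt;";   break;
        case '"':  rep = "&quot;"; break;
        default:   rep = single;   break;
        }
        size_t len = strlen(rep);
        if (used + len + 1 > dst_size) {
            dst[used] = '\0';   /* keep the partial output terminated */
            return -1;
        }
        memcpy(dst + used, rep, len);
        used += len;
    }
    dst[used] = '\0';
    return 0;
}
```

The ampersand is converted as well, so that text which already looks like an entity is displayed literally rather than decoded by the browser.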

Some of the freeware tools available to detect XPATH injection are Paros from SourceForge and WS Digger from Foundstone (McAfee).

Paros

Paros is a Java-based HTTP/HTTPS proxy for assessing web application vulnerability. It supports editing and viewing HTTP messages on the fly. Other features include spiders, client certificates, proxy chaining, and intelligent scanning for XSS and SQL injection. It is platform independent and requires only JRE 1.4. The following are the functions of the Paros tool.

Spider

The spider is used to crawl web sites and gather as many URL links as possible. This gives you a better understanding of the web site hierarchy tree in a short time, before manual navigation. It supports cookies and proxy chaining, which is set at the field in the Option tab. It automatically adds URL links to the web site hierarchy tree for later scanning.

Scanner

The scanner function scans the server based on the website hierarchy. It can check for server misconfiguration. An automatic web scanner may not be able to find the paths and check whether there are any backup files (.bak) that could expose server information. To use this function, you need to navigate the website first. After you log on to a website and navigate it, Paros builds a website hierarchy tree automatically. Then you can do the following things:

a) If you want to scan all websites on the tree, you can then click on the menu item "Tree" =>"Scan All" to trigger the scanning.

b) If you just want to scan one website on the tree, you can click on that site in the tree panel and click menu item "Tree" => "Scan selected Node" (You can also right click on the tree view and choose the options).

Paros includes the following checks:

- HTTP PUT allowed − check if the PUT option is enabled at server directories

- Directory index table − check if the server directories can be browsed.

- Obsolete files existed − check if there exist obsolete files at

- Cross−site scripting − check if Cross−site scripting (XSS) is allowed on the query parameters

Note that all the above checks are based on the URLs in the website hierarchy. That means the scanner will check each URL for each vulnerability. Compared with other web scanners, which just do a blind scan without a website hierarchy, the Paros scanning result is more accurate.

Filter

Filters detect certain predefined patterns in HTTP messages and alert you when they occur. If you use filters, you do not need to trap every HTTP message and search it for the pattern. You can also use filters to log information in which you are interested. Paros has the following filters:

a) LogCookie

b) LogGetQuery

c) LogPostQuery

d) CookieDetectFilter

e) IfModifiedSinceFilter

WS Digger Tool

The WS Digger tool from McAfee is helpful in testing because it has the following features:

- Built-in sample attack plug-ins: SQL injection, cross site scripting, XPATH injection.

- Automated web services discovery: The tool analyzes public and/or private UDDI to discover web services related to search strings.

- Automated attack vector discovery: It analyzes the web service to determine potential points of attack.

- Automated and manual exploit testing: It can be used to manually inject malicious data and generate test cases which can be automatically executed through the attack plug-ins.

- HTML reporting: It also reports the results and test case history for further analysis.

4. Format String Vulnerability

Format string vulnerabilities became known only in the early 90’s. A format string injection takes advantage of a formatting function to hack the system.

Format string Vulnerability occurs when a user supplied data are used directly as formatting string input for functions. Format string attacks alter the flow of an application by using string formatting library features to access memory space.

Consider a programmer who wants to print out a string or copy it to some buffer. What he means to write is something like:

printf ("%s", str);

but instead he decides that he can save time, effort and 6 bytes of source code by typing:

printf (str);

The programmer intends the first argument to printf to be a string to print. Instead, the printf function interprets the string as a format string and scans it for special format characters such as "%d". As formats are encountered, a variable number of argument values are retrieved from the stack. At the least, it should be obvious that an attacker can peek into the memory of the program by printing out the values stored on the stack.
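
The difference between the two calls can be made concrete with a small helper. This is a minimal sketch, assuming the untrusted text arrives as a NUL-terminated string; the function name `format_untrusted` is hypothetical.

```c
#include <stdio.h>
#include <stddef.h>

/* Copies untrusted text into out using a fixed "%s" format, so any
 * conversion specifiers inside the text are treated as plain characters.
 * Returns what snprintf returns: the length the full text needs. */
int format_untrusted(char *out, size_t out_size, const char *untrusted)
{
    /* Unsafe variant (do not use): snprintf(out, out_size, untrusted);
     * there the untrusted text itself would be parsed as the format. */
    return snprintf(out, out_size, "%s", untrusted);
}
```

With the fixed format, an input such as "%x.%x.%n" ends up in the buffer unchanged instead of being interpreted.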

Format string attacks can be used to read data from the stack, read character strings from the process's memory, and write an integer to locations in the process's memory.

Format string attacks fall into three categories:

- Denial of Service

- Reading

- Writing

Reading: Format string reading attacks attempt to read from memory that the attacker does not normally have access to. This technique uses %x format specifiers to print sections of memory.

Writing: Format string writing attacks use the %d, %u or %x format specifiers to control the number of characters output, and the %n conversion character to write that count as an integer to a location in memory. In this way an attacker may overwrite the instruction pointer and force the execution of user-supplied shell code.
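
The writing mechanism can be illustrated safely with a fixed format string. The sketch below is not an exploit: it only shows that %n stores the number of characters produced so far, and that a field width controls that number. The function name `pad_and_count` is hypothetical, and some C libraries restrict or disable %n, so the behaviour is assumed to be that of a standard-conforming printf family.

```c
#include <stdio.h>
#include <stddef.h>

/* Formats value in hexadecimal, right-aligned in a field of width
 * characters, then uses %n to store the number of characters produced
 * so far into *written. The format string is a fixed literal; only the
 * width is variable. */
int pad_and_count(char *out, size_t out_size, int width, unsigned value,
                  int *written)
{
    return snprintf(out, out_size, "%*x%n", width, value, written);
}
```

In an attack the same two ingredients are supplied by the attacker inside the format string itself: padding specifiers choose the integer, and %n writes it through a pointer taken from the stack.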

The key to testing format string vulnerabilities is supplying format type specifiers in application input.

For example, consider an application that processes a URL string such as http://abcd.com/html/en/index.htm or accepts input from a form. If a format string vulnerability exists in one of the routines processing this information, supplying a URL like http://xyzhost.com/html/en/index.htm%n%n%n, or passing %n in one of the form fields, might crash the application and create a core dump in the hosting folder.

Format string vulnerabilities are most common in web servers, application servers or web applications that use C/C++ based code, or in CGI scripts written in C. In most of these cases an error reporting or logging function such as syslog() has been called insecurely.

When testing CGI scripts for format string vulnerabilities, the input parameters can be manipulated to include %x or %n type specifiers. For example, a legitimate request like http://hostname/cgi-bin/query.cgi?name=allen&code=45000 can be altered to

http://hostname/cgi-bin/query.cgi?name=allen%x.%x.%x&code=45000%x.%x

If a format string vulnerability exists in the routine processing this request, the tester will be able to see stack data being printed out to the browser.
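
Building such test requests by hand is tedious, so a tester might generate them. The following is a minimal sketch, assuming the query string uses "&" as the parameter separator; the function name `inject_payload` is hypothetical, and it appends the same payload to every parameter rather than a different one per parameter as in the example above.

```c
#include <stddef.h>
#include <string.h>

/* Appends payload to every parameter value in query, e.g.
 * "a=1&b=2" becomes "a=1<payload>&b=2<payload>".
 * Returns 0 on success, or -1 if out is too small. */
int inject_payload(const char *query, const char *payload,
                   char *out, size_t out_size)
{
    size_t used = 0;
    size_t plen = strlen(payload);

    for (const char *p = query; ; p++) {
        if (*p == '&' || *p == '\0') {
            /* End of one parameter: add the payload before the separator. */
            if (used + plen >= out_size)
                return -1;
            memcpy(out + used, payload, plen);
            used += plen;
        }
        if (*p == '\0')
            break;
        if (used + 1 >= out_size)
            return -1;
        out[used++] = *p;
    }
    out[used] = '\0';
    return 0;
}
```

Depending on how the server decodes the request, the percent signs may need to be sent URL-encoded as %25 so that the specifiers reach the routine under test intact.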

Several tools are available to check source code for format string vulnerabilities. In manual testing, reviews should give priority to the formatting functions specific to the development platform, and each call should be checked to confirm that user-supplied data is never used as the format string.

ITS4 is a tool for statically scanning security-critical C and C++ source code for vulnerabilities. ITS4, along with its source code, is available from

http://www.rstcorp.com/its4/.
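
To give a feel for what a static check looks for, here is a deliberately simple heuristic in C. It is not how ITS4 works and it will miss many cases; it only flags a printf call whose first argument does not begin with a string literal. The function name `line_has_risky_printf` is hypothetical.

```c
#include <ctype.h>
#include <string.h>

/* Returns 1 if line contains a call to printf whose first argument does
 * not start with a string literal, e.g. printf(str). Calls such as
 * fprintf or snprintf are skipped, because the name must not be preceded
 * by an identifier character. */
int line_has_risky_printf(const char *line)
{
    const char *p = line;

    while ((p = strstr(p, "printf")) != NULL) {
        const char *after = p + 6;  /* strlen("printf") */
        int own_word = (p == line) ||
                       !(isalnum((unsigned char)p[-1]) || p[-1] == '_');

        while (*after == ' ' || *after == '\t')
            after++;
        if (own_word && *after == '(') {
            after++;
            while (*after == ' ' || *after == '\t')
                after++;
            if (*after != '"' && *after != '\0')
                return 1;
        }
        p += 6;
    }
    return 0;
}
```

A reviewer could run such a check over each source line to build a shortlist, then inspect every flagged call by hand to see whether the argument can be influenced by user input.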

Conclusion

Apart from the Command Execution attacks discussed, Client Side Attacks also challenge the security of web based applications.

Client-side attacks focus on the abuse or exploitation of a web site’s users. When a user visits a web site, trust is established between the two parties both technologically and psychologically. A user expects the web sites they visit to deliver valid content. A user also expects the web site not to attack them during their stay. By leveraging these trust relationship expectations, an attacker may employ several techniques to exploit the user.

In Content Spoofing, the technique is to trick a user into believing that certain content appearing on a web site is legitimate and not from an external source.

In Cross-site Scripting (XSS), the web site is forced to echo attacker-supplied executable code, which loads in a user’s browser.

Web applications are used for a variety of functions, such as booking tickets, shopping and banking, that involve a lot of money, so they should be made robust and free from vulnerabilities. Web applications will be used more widely if they are well secured. The approach to validating a web application should not focus only on functionality and load testing but should also validate the security of the application against vulnerabilities.

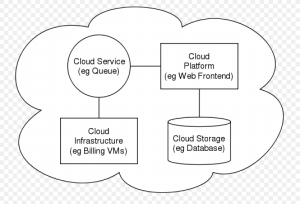

What is Cloud Computing?

Cloud computing is Internet-based development and use of computer technology. "Cloud" here stands for the Internet, and "computing" refers to the computer technology used.

It is a style of computing in which real-time scalable resources are typically provided as a service over the Internet to users who need not have knowledge of, expertise in, or control over the technology infrastructure that supports them.

This concept incorporates Software as a Service (SaaS), Web 2.0 and other recent well-known technology trends, in which the common theme is reliance on the Internet for satisfying the computing needs of the users.

Companies in Cloud Computing

Companies providing cloud computing services include Amazon, Google, and Yahoo. A few of the companies that have adopted cloud computing are General Electric, L’Oréal and Procter & Gamble.

History of Cloud Computing:

The concept of cloud computing dates back to the early 1960s, when John McCarthy opined that “computation may someday be organized as a public utility”. Then, in the early 1990s, the concept was put to commercial use in the form of large ATM networks.

Amazon.com played a key role in the development of cloud computing by modernizing their data centers after the dot-com bubble and, having found that the new cloud architecture resulted in significant internal efficiency improvements, providing access to their systems by way of Amazon Web Services in 2002 on a utility computing basis.

2007 saw increased activity, with Google, IBM, and a number of universities embarking on a large scale cloud computing research project, around the time the term started gaining popularity in the mainstream press. It was a hot topic by mid-2008 and numerous cloud computing events had been scheduled.

In August 2008, Gartner observed that "organizations are switching from company-owned hardware and software assets to per-use service-based models" and that the "projected shift to cloud computing will result in dramatic growth in IT products in some areas and in significant reductions in other areas."

Key Characteristics:

• Customers minimize capital expenditure; this lowers barriers to entry, as infrastructure is owned by the provider and does not need to be purchased for one-time or infrequent intensive computing tasks. Services are typically available to or specifically targeted to retail consumers and small businesses.

• Device and location independence enable users to access systems regardless of their location or what device they are using, e.g., PC, mobile.

• Multi-tenancy enables sharing of resources and costs among a large pool of users.

• Performance is monitored and consistent, but can suffer from insufficient bandwidth or high network load.

• Reliability improves through the use of multiple redundant sites, which makes it suitable for business continuity and disaster recovery. Nonetheless, most major cloud computing services have suffered outages and IT and business managers are able to do little when they are affected.

• Scalability meets changing user demands quickly without users having to engineer for peak loads.

• Security typically improves due to centralization of data, increased security-focused resources, etc., but raises concerns about loss of control over certain sensitive data. Security is often as good as or better than traditional systems, in part because providers are able to devote shared resources that most customers cannot afford. Providers typically log accesses, but accessing the audit logs themselves can be difficult or impossible.

• Sustainability comes about through improved resource utilization, more efficient systems, and carbon neutrality. Nonetheless, computers and associated infrastructure are major consumers of energy.

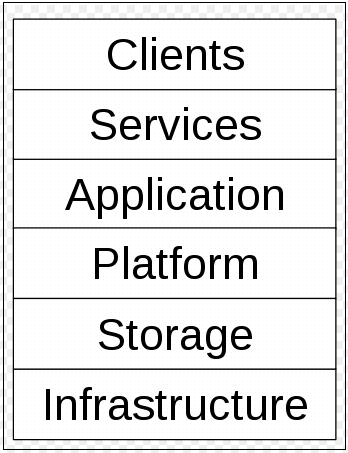

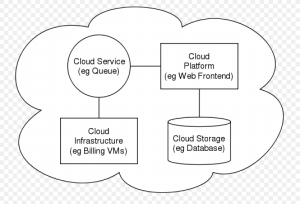

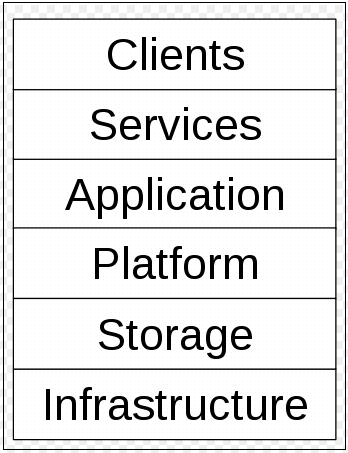

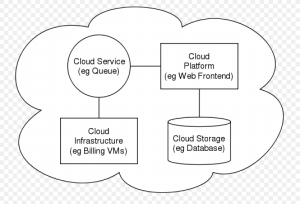

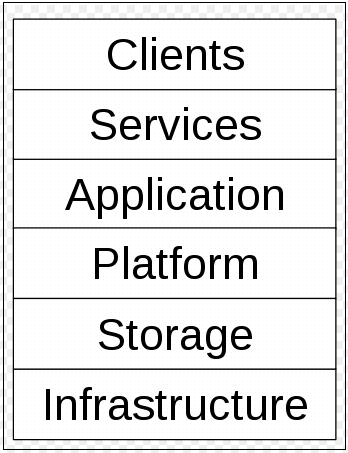

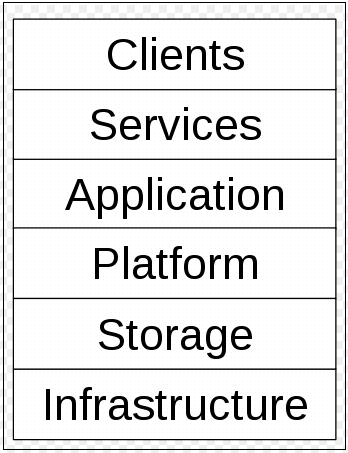

Architecture:

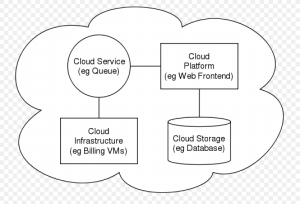

Cloud architecture, the systems architecture of the software systems involved in the delivery of cloud computing, comprises hardware and software designed by a cloud architect who typically works for a cloud integrator. It typically involves multiple cloud components communicating with each other over application programming interfaces, usually web services.

This closely resembles the Unix philosophy of having multiple programs doing one thing well and working together over universal interfaces. Complexity is controlled and the resulting systems are more manageable than their monolithic counterparts.

Cloud architecture extends to the client, where web browsers and/or software applications access cloud applications.

Cloud storage architecture is loosely coupled, where metadata operations are centralized enabling the data nodes to scale into the hundreds, each independently delivering data to applications or users.

Components:

Application

A cloud application leverages the Cloud in software architecture, often eliminating the need to install and run the application on the customer's own computer, thus alleviating the burden of software maintenance, ongoing operation, and support. For example:

• Peer-to-peer / volunteer computing (BitTorrent, BOINC Projects, Skype)

• Web application (Facebook)

• Software as a service (Google Apps, Salesforce)

• Software plus services (Microsoft Online Services)

Client

A cloud client consists of computer hardware and/or computer software which relies on The Cloud for application delivery, or which is specifically designed for delivery of cloud services, and which in either case is essentially useless without it. For example:

• Mobile (Android, iPhone, Windows Mobile)

• Thin client (CherryPal, Zonbu, gOS-based systems)

• Thick client / Web browser (Google Chrome, Mozilla Firefox)

Infrastructure

Cloud infrastructure, such as Infrastructure as a service, is the delivery of computer infrastructure, typically a platform virtualization environment, as a service. For example:

• Full virtualization (GoGrid, Skytap)

• Grid computing (Sun Grid)

• Management (RightScale)

• Compute (Amazon Elastic Compute Cloud)

Platform

A cloud platform, such as Platform as a service, is the delivery of a computing platform and/or solution stack as a service. It facilitates deployment of applications without the cost and complexity of buying and managing the underlying hardware and software layers. For example:

• Web application frameworks

• Proprietary (Azure, Force.com)

Service

A cloud service, such as Web Service, is “software system[s] designed to support interoperable machine-to-machine interaction over a network” which may be accessed by other cloud computing components, software, e.g., Software plus services, or end users directly. For example:

• Identity (OAuth, OpenID)

• Integration (Amazon Simple Queue Service)

• Payments (Amazon Flexible Payments Service, Google Checkout, PayPal)

• Mapping (Google Maps, Yahoo! Maps)

• Search (Alexa, Google Custom Search, Yahoo! BOSS)

• Others (Amazon Mechanical Turk)

Storage

Cloud storage involves the delivery of data storage as a service, including database-like services, often billed on a utility computing basis, e.g., per gigabyte per month. For example:

• Database (Amazon SimpleDB, Google App Engine's BigTable datastore)

• Network attached storage (MobileMe iDisk, CTERA Cloud Attached Storage, Nirvanix CloudNAS, IBM Scale out File Services (SoFS))

• Synchronisation (Live Mesh Live Desktop component, MobileMe push functions)

• Web service (Amazon Simple Storage Service, Nirvanix SDN)

Roles:

Provider

A cloud computing provider or cloud computing service provider owns and operates live cloud computing systems to deliver service to third parties. Usually this requires significant resources and expertise in building and managing next-generation data centers. Some organisations realise a subset of the benefits of cloud computing by becoming "internal" cloud providers and servicing themselves, although they do not benefit from the same economies of scale and still have to engineer for peak loads. The barrier to entry is also significantly higher, with capital expenditure required, and billing and management create some overhead. Nonetheless, significant operational efficiency and agility advantages can be realised, even by small organisations, and server consolidation and virtualization rollouts are already well underway. Amazon.com was the first such provider, modernising its data centers which, like most computer networks, were using as little as 10% of their capacity at any one time just to leave room for occasional spikes. This allowed small, fast-moving groups to add new features faster and more easily, and the company went on to open the system up to outsiders as Amazon Web Services in 2002 on a utility computing basis.

User

A user is a consumer of cloud computing. The privacy of users in cloud computing has become an increasing concern. The rights of users are also an issue, which is being addressed via a community effort to create a bill of rights.

Vendor

A vendor sells products and services that facilitate the delivery, adoption and use of cloud computing. For example:

• Computer hardware (Dell, HP, IBM, Sun Microsystems)

1. SaaS